Meta and Oracle choose NVIDIA Spectrum-X for AI data centres

Meta and Oracle are upgrading their AI data centres with NVIDIA’s Spectrum-X Ethernet networking switches — technology built to handle the growing demands of large-scale AI systems. Both companies are adopting Spectrum-X as part of an open networking framework designed to improve AI training efficiency and accelerate deployment across massive compute clusters.

Jensen Huang, NVIDIA’s founder and CEO, said trillion-parameter models are transforming data centres into “giga-scale AI factories,” adding that Spectrum-X acts as the “nervous system” connecting millions of GPUs to train the largest models ever built.

Oracle plans to use Spectrum-X Ethernet with NVIDIA’s Vera Rubin architecture to build large-scale AI factories. Mahesh Thiagarajan, Oracle Cloud Infrastructure’s executive vice president, said the new setup will allow the company to connect millions of GPUs more efficiently, helping customers train and deploy new AI models faster.

Meta, meanwhile, is expanding its AI infrastructure by integrating Spectrum-X Ethernet switches into the Facebook Open Switching System (FBOSS), its in-house platform for managing network switches at scale. According to Gaya Nagarajan, Meta’s vice president of networking engineering, the company’s next-generation network must be open and efficient to support ever-larger AI models and deliver services to billions of users.

Building flexible AI systems

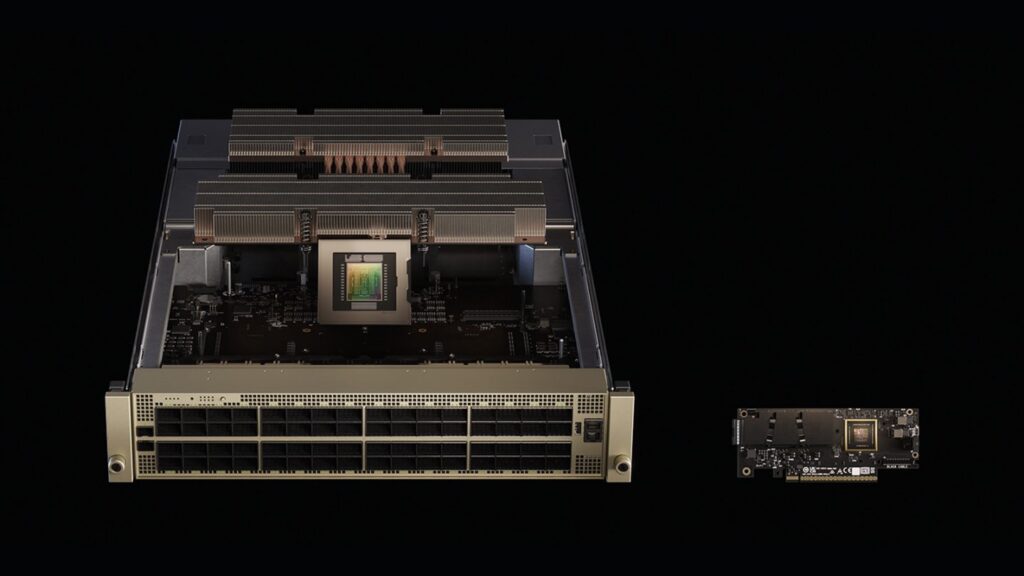

According to Joe DeLaere, who leads NVIDIA’s Accelerated Computing Solution Portfolio for Data Centre, flexibility is key as data centres grow more complex. He explained that NVIDIA’s MGX system offers a modular, building-block design that lets partners combine different CPUs, GPUs, storage, and networking components as needed.

The system also promotes interoperability, allowing organisations to use the same design across multiple generations of hardware. “It offers flexibility, faster time to market, and future readiness,” DeLaere told the media.

As AI models become larger, power efficiency has become a central challenge for data centres. DeLaere said NVIDIA is working “from chip to grid” to improve energy use and scalability, collaborating closely with power and cooling vendors to maximise performance per watt.

One example is the shift to 800-volt DC power delivery, which reduces heat loss and improves efficiency. The company is also introducing power-smoothing technology to reduce spikes on the electrical grid — an approach that can cut maximum power needs by up to 30 per cent, allowing more compute capacity within the same footprint.
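To make the footprint claim concrete, the arithmetic can be sketched as below. This is a rough illustration rather than NVIDIA's own calculation: it assumes a site is limited by its provisioned peak power and that all freed headroom goes to compute. The function name `capacity_gain` is hypothetical; the 30 per cent figure is the upper bound quoted above.

```python
def capacity_gain(peak_reduction: float) -> float:
    """Factor by which compute could grow within a fixed peak-power budget.

    Assumption (not from the article): capacity is limited only by
    provisioned peak power, so cutting each unit's peak by a fraction r
    lets 1 / (1 - r) times as many units fit in the same budget.
    """
    if not 0.0 <= peak_reduction < 1.0:
        raise ValueError("peak_reduction must be in [0, 1)")
    return 1.0 / (1.0 - peak_reduction)


# Upper bound quoted in the article: up to 30 per cent lower maximum power.
gain = capacity_gain(0.30)
print(f"Compute within the same power budget: about {gain:.2f}x")
```

Under those assumptions the best case is roughly 1.4 times the compute for the same provisioned power. Real deployments would also be constrained by cooling and floor space.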

Scaling up, out, and across

NVIDIA’s MGX system also plays a role in how data centres are scaled. Gilad Shainer, the company’s senior vice president of networking, told the media that MGX racks host both compute and switching components, supporting NVLink for scale-up connectivity and Spectrum-X Ethernet for scale-out growth.

He added that MGX can connect multiple AI data centres together as a unified system — what companies like Meta need to support massive distributed AI training operations. Depending on distance, they can link sites through dark fibre or additional MGX-based switches, enabling high-speed connections across regions.

Meta’s adoption of Spectrum-X reflects the growing importance of open networking. Shainer said the company will use FBOSS as its network operating system but noted that Spectrum-X supports several others, including Cumulus, SONiC, and Cisco’s NOS through partnerships. This flexibility allows hyperscalers and enterprises to standardise their infrastructure using the systems that best fit their environments.

Expanding the AI ecosystem

NVIDIA sees Spectrum-X as a way to make AI infrastructure more efficient and accessible across different scales. Shainer said the Ethernet platform was designed specifically for AI workloads like training and inference, offering up to 95 per cent effective bandwidth and outperforming traditional Ethernet by a wide margin.

He added that NVIDIA’s partnerships with companies such as Cisco, xAI, Meta, and Oracle Cloud Infrastructure are helping to bring Spectrum-X to a broader range of environments — from hyperscalers to enterprises.

Preparing for Vera Rubin and beyond

DeLaere said NVIDIA’s upcoming Vera Rubin architecture is expected to be commercially available in the second half of 2026, with the Rubin CPX product arriving by year’s end. Both will work alongside Spectrum-X networking and MGX systems to support the next generation of AI factories.

He also clarified that Spectrum-X and XGS share the same core hardware but use different algorithms for varying distances — Spectrum-X for inside data centres and XGS for inter–data centre communication. This approach minimises latency and allows multiple sites to operate together as a single large AI supercomputer.

Collaborating across the power chain

To support the 800-volt DC transition, NVIDIA is working with partners from chip level to grid. The company is collaborating with Onsemi and Infineon on power components, with Delta, Flex, and Lite-On at the rack level, and with Schneider Electric and Siemens on data centre designs. A technical white paper detailing this approach will be released at the OCP Summit.

DeLaere described this as a “holistic design from silicon to power delivery,” ensuring all systems work seamlessly together in the high-density AI environments that companies like Meta and Oracle operate.

Performance advantages for hyperscalers

Spectrum-X Ethernet was built specifically for distributed computing and AI workloads. Shainer said it offers adaptive routing and telemetry-based congestion control to eliminate network hotspots and deliver stable performance. These features enable higher training and inference speeds while allowing multiple workloads to run simultaneously without interference.

He added that Spectrum-X is the only Ethernet technology proven to scale at extreme levels, helping organisations get the best performance and return on their GPU investments. For hyperscalers such as Meta, that scalability helps manage growing AI training demands and keep infrastructure efficient.

Hardware and software working together

While NVIDIA’s focus is often on hardware, DeLaere said software optimisation is equally important. The company continues to improve performance through co-design — aligning hardware and software development to maximise efficiency for AI systems.

NVIDIA is investing in FP4 kernels, frameworks such as Dynamo and TensorRT-LLM, and algorithms like speculative decoding to improve throughput and AI model performance. These updates, he said, ensure that systems like Blackwell continue to deliver better results over time for hyperscalers such as Meta that rely on consistent AI performance.

Networking for the trillion-parameter era

The Spectrum-X platform — which includes Ethernet switches and SuperNICs — is NVIDIA’s first Ethernet system purpose-built for AI workloads. It’s designed to link millions of GPUs efficiently while maintaining predictable performance across AI data centres.

With congestion-control technology achieving up to 95 per cent data throughput, Spectrum-X marks a major leap over standard Ethernet, which typically reaches only about 60 per cent due to flow collisions. Its XGS technology also supports long-distance AI data centre links, connecting facilities across regions into unified “AI super factories.”
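As a rough illustration of what those two percentages mean per link, the comparison can be sketched as follows. The 800 Gb/s port speed is a hypothetical value chosen for the example and does not come from the article; the 60 and 95 per cent figures are the ones quoted above, and `effective_gbps` is an illustrative helper, not part of any NVIDIA tooling.

```python
def effective_gbps(link_gbps: float, utilisation: float) -> float:
    """Usable bandwidth on a link for a given fractional utilisation."""
    if not 0.0 <= utilisation <= 1.0:
        raise ValueError("utilisation must be between 0 and 1")
    return link_gbps * utilisation


LINK_GBPS = 800.0  # hypothetical port speed, an assumption for this example

standard = effective_gbps(LINK_GBPS, 0.60)    # typical standard Ethernet figure
spectrum_x = effective_gbps(LINK_GBPS, 0.95)  # quoted Spectrum-X upper bound
ratio = spectrum_x / standard                 # independent of the link speed

print(f"Standard Ethernet: {standard:.0f} Gb/s usable")
print(f"Spectrum-X:        {spectrum_x:.0f} Gb/s usable")
print(f"Ratio:             {ratio:.2f}x")
```

Whatever the link speed, moving from about 60 to up to 95 per cent utilisation works out to roughly 1.6 times the usable bandwidth per link.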

By tying together NVIDIA’s full stack — GPUs, CPUs, NVLink, and software — Spectrum-X provides the consistent performance needed to support trillion-parameter models and the next wave of generative AI workloads.

(Photo by Nvidia)
